The size and complexity of a cloud infrastructure directly affect how hard a cloud project is to manage, maintain and keep within budget. It might be easy to create resources using the Azure Portal or the CLI, but things get trickier when you need to update properties, change plans, upgrade services or re-create all services in new regions. The combination of Azure DevOps Pipelines and Terraform offers an enterprise-level solution to this problem.

The Infrastructure Problem

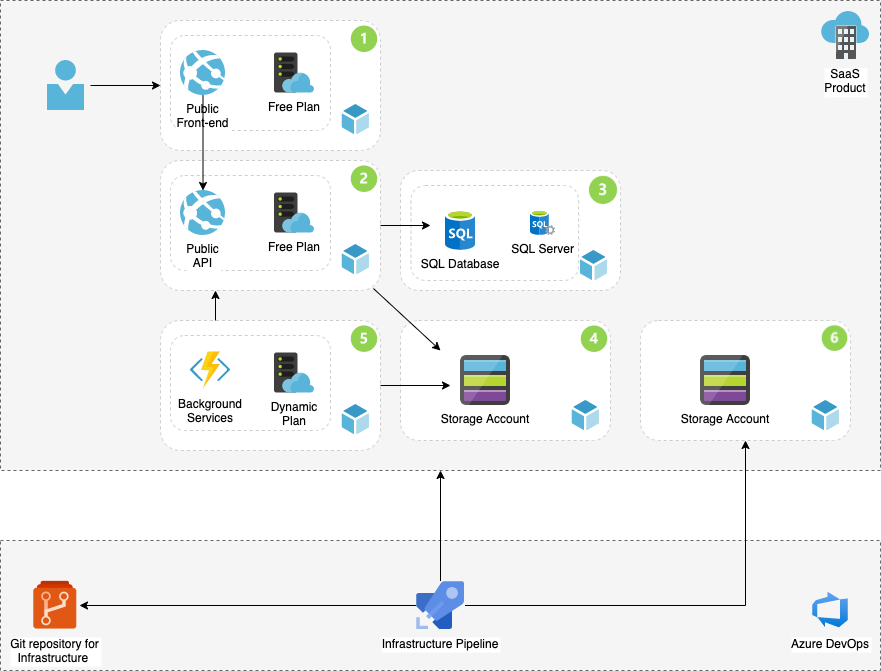

My previous blog post introduced a simple SaaS architecture which included App Services, a SQL database, a storage account and background processes using Azure Functions. But why should we create automation for such a small environment?

In my project, the need arose as I was creating background processes for the SaaS platform. Let’s assume we have three Azure Functions that send emails, send SMS messages and modify user pictures. Each Function is started by a storage account queue trigger. What if each Function has to use the API to write values to the database? If the path of the API changes for some reason, it would be insane to manually update the URL of the new endpoint in three places. Not to mention that the service might be running in three Azure regions, and that no one should update any value manually in the production environment, let alone without an approval process.

Azure Infrastructure using Terraform

There are a few ways to have Infrastructure as Code using Azure DevOps Pipelines. You can always export the ARM templates of your Azure resources and build the pipeline on those, but I found this complicated and time-consuming. The second choice was to use the Azure CLI in pipelines to create resources, but the problem there is maintenance: the more resources you have, the more laborious the script becomes to manage.

The third option was to use third-party tools and, to be honest, I fell in love with Terraform. The tool has providers for the major clouds, including of course Azure. The easiest way to start with the tool is to install the CLI on your computer and follow the 12-section learning path. Let’s add the supplementary parts to the existing architecture.

The explanations for items one to five are in my previous blog post, so let’s concentrate on the new parts.

The New Components and the Build

Azure Infrastructure using Terraform and Pipelines requires knowledge of Terraform, and at this stage, I assume that you are familiar with the Terraform init, plan and apply commands. When you run Terraform locally, all configuration and metadata files are stored on your computer's hard disk. Once the build moves to Azure DevOps Pipelines, the Terraform-generated metadata and the infrastructure code files need to be stored somewhere the pipeline can reach.

Git repositories are a perfect solution for storing the Terraform infrastructure code files (.tf) and any other tools which are part of the pipeline. I have created a separate repository for the infrastructure in my project and named it InfraProvisioning.

To save the Terraform state and metadata files, we need different storage than a Git repository. Each Terraform run compares the desired configuration with the recorded state of the existing infrastructure and then changes the environment to match. Azure Blob Storage is a perfect location to save these files. The resource is marked as number six in the architecture diagram. But how should we automatically create the storage and include it as part of the automated infrastructure?
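As a rough sketch of how a pipeline step might point Terraform at that blob storage, the commands below pass the backend settings to `terraform init`. It assumes the Terraform files declare an empty `backend "azurerm" {}` block, so the values can be supplied at init time. The resource group, storage account, container and key names are placeholders made up for illustration, and authentication to the storage account is left out.

```shell
#!/usr/bin/env bash
# Sketch only: every name below is a hypothetical placeholder.
set -euo pipefail

RESOURCE_GROUP="${RESOURCE_GROUP:-rg-shared-tfstate}"
STORAGE_ACCOUNT="${STORAGE_ACCOUNT:-sttfstatedemo001}"
CONTAINER="${CONTAINER:-tfstate}"
STATE_KEY="${STATE_KEY:-infra.terraform.tfstate}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the command, 0 = execute it

# Settings understood by Terraform's azurerm backend.
init_args=(
  "-backend-config=resource_group_name=${RESOURCE_GROUP}"
  "-backend-config=storage_account_name=${STORAGE_ACCOUNT}"
  "-backend-config=container_name=${CONTAINER}"
  "-backend-config=key=${STATE_KEY}"
)

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi
}

run terraform init "${init_args[@]}"
```

With `DRY_RUN=0` the same step would actually initialise the backend, after which plan and apply read and write the state in the blob instead of on the local disk.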

I have created a Bash script which is available for download from my GitHub project. The script logs in to Azure using the CLI and creates a Shared resource group, a storage account and a blob container. The Bash file is part of the build process and is parametrised, but all parameters can be replaced with hard-coded values in the script. The Bash file is the initial part of the pipeline, and how to implement the pipeline is the subject of my next blog post.
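To give an idea of what such a bootstrap script could look like, here is a minimal sketch. It is not the script from my GitHub project. It assumes the Azure CLI session is already authenticated (for example with `az login`), and the resource names, the region and the `DRY_RUN` switch are all illustrative assumptions.

```shell
#!/usr/bin/env bash
# Sketch only: names, region and the DRY_RUN switch are hypothetical.
set -euo pipefail

RESOURCE_GROUP="${RESOURCE_GROUP:-rg-shared-tfstate}"
LOCATION="${LOCATION:-westeurope}"
STORAGE_ACCOUNT="${STORAGE_ACCOUNT:-sttfstatedemo001}"
CONTAINER="${CONTAINER:-tfstate}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi
}

bootstrap_state_storage() {
  # Shared resource group that holds the state storage.
  run az group create --name "$RESOURCE_GROUP" --location "$LOCATION"
  # Storage account for the Terraform state.
  run az storage account create --name "$STORAGE_ACCOUNT" \
    --resource-group "$RESOURCE_GROUP" --location "$LOCATION" \
    --sku Standard_LRS
  # Blob container in which the state file will live.
  run az storage container create --name "$CONTAINER" \
    --account-name "$STORAGE_ACCOUNT"
}

bootstrap_state_storage
```

Because the names come from environment variables with defaults, a pipeline can override them per environment, while a local dry run simply prints the three `az` commands.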